06/2025

Recent years have seen substantial improvements in the ability to generate synthetic 3D objects using AI. However, generating complex 3D objects, such as plants, remains a considerable challenge. Current generative 3D models struggle with plant generation compared to general objects, limiting their usability in plant analysis tools, which require fine detail and accurate geometry. We introduce PlantDreamer, a novel approach to 3D synthetic plant generation, which can achieve greater levels of realism for complex plant geometry and textures than available text-to-3D models. To achieve this, our new generation pipeline leverages a depth ControlNet, fine-tuned Low-Rank Adaptation and an adaptable Gaussian culling algorithm, which directly improve textural realism and geometric integrity of generated 3D plant models. Additionally, PlantDreamer enables both purely synthetic plant generation, by leveraging L-System-generated meshes, and the enhancement of real-world plant point clouds by converting them into 3D Gaussian Splats. We evaluate our approach by comparing its outputs with state-of-the-art text-to-3D models, demonstrating that PlantDreamer outperforms existing methods in producing high-fidelity synthetic plants. Our results indicate that our approach not only advances synthetic plant generation, but also facilitates the upgrading of legacy point cloud datasets, making it a valuable tool for 3D phenotyping applications.

Full Paper Link:

https://arxiv.org/abs/2505.15528

GitHub Link:

https://github.com/Lewis-Stuart-11/PlantDreamer

02/2025

The reconstruction of 3D plant models can offer advantages over traditional 2D approaches by more accurately capturing the complex structure and characteristics of different crops. Conventional 3D reconstruction techniques often produce sparse or noisy representations of plants when implemented in software, or require expensive capture hardware. Recently, view synthesis models have been developed that can generate detailed 3D scenes, and even 3D models, from only RGB images and camera poses. These models offer unparalleled accuracy, but are currently data-hungry, requiring large numbers of views with very accurate camera calibration. In this study, we present a view synthesis dataset comprising 20 individual wheat plants captured across 6 different time frames over a 15-week growth period. We develop a camera capture system using two robotic arms combined with a turntable, controlled by a re-deployable and flexible image capture framework. We train each plant instance using two recent view synthesis models: 3D Gaussian Splatting (3DGS) and Neural Radiance Fields (NeRF). Our results show that both 3DGS and NeRF produce high-fidelity reconstructed images of a plant subject from views not captured in the initial training sets. We also show that these approaches can be used to generate accurate 3D representations of these plants as point clouds, with 0.74 mm and 1.43 mm average accuracy compared with a handheld scanner for 3DGS and NeRF, respectively. We believe that these new methods will be transformative in the field of 3D plant phenotyping, plant reconstruction and active vision.
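The abstract above reports average point cloud accuracy against a handheld scanner but does not state how the distance is computed. As an illustration only, one common choice is the mean nearest-neighbour (cloud-to-cloud) distance, sketched below. The function name `mean_nearest_distance` and the toy coordinates are hypothetical and are not taken from the paper.

```python
import math

def mean_nearest_distance(reconstructed, reference):
    """Average distance from each reconstructed point to its nearest
    reference point (assumed metric; brute force, fine for small clouds)."""
    if not reconstructed or not reference:
        raise ValueError("both point clouds must be non-empty")
    total = 0.0
    for p in reconstructed:
        # Nearest reference point to p by Euclidean distance.
        total += min(math.dist(p, q) for q in reference)
    return total / len(reconstructed)

# Toy clouds in millimetres (hypothetical values, not data from the paper).
scan = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 0.0)]
recon = [(0.0, 0.0, 1.0), (10.0, 0.0, 0.0), (0.0, 10.0, 2.0)]

error_mm = mean_nearest_distance(recon, scan)
print(f"mean nearest-neighbour error: {error_mm:.2f} mm")
```

For clouds with many thousands of points, a spatial index such as a k-d tree would replace the brute-force inner loop.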

10/2021

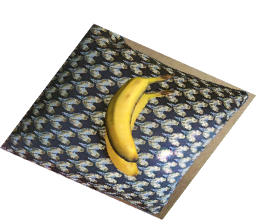

3D reconstruction of fruit is a key component of fruit grading and of many size-estimation pipelines. Like many computer vision challenges, the 3D reconstruction task suffers from a lack of readily available training data in most domains, with methods typically depending on large datasets of high-quality image-model pairs. In this paper, we propose an unsupervised domain-adaptation approach to 3D reconstruction where labelled images only exist in our source synthetic domain, and training is supplemented with different unlabelled datasets from the target real domain. We approach the problem of 3D reconstruction using volumetric regression and produce a training set of 25,000 pairs of images and volumes using hand-crafted 3D models of bananas rendered in a 3D modelling environment (Blender). Each image is then enhanced by a GAN to more closely match the domain of real photographs by introducing a volumetric consistency loss, which improves the performance of 3D reconstruction on real images. Our solution harnesses the cost benefits of synthetic data while still maintaining good performance on real-world images. We focus this work on the task of 3D banana reconstruction from a single image, representing a common task in plant phenotyping, but this approach is general and may be adapted to any 3D reconstruction task, including other plant species and organs.

11/2019

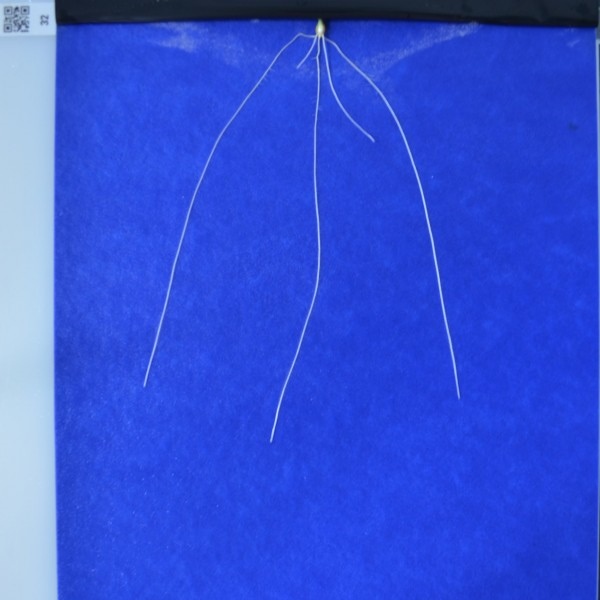

In recent years, quantitative analysis of root growth has become increasingly important as a way to explore the influence of abiotic stress such as high temperature and drought on a plant’s ability to take up water and nutrients. Segmentation and feature extraction of plant roots from images present a significant computer vision challenge. We present a new image analysis approach that provides fully automatic extraction of complex root system architectures from a range of plant species in varied imaging set-ups. Driven by modern deep-learning approaches, RootNav 2.0 replaces previously manual and semi-automatic feature extraction with an extremely deep multi-task convolutional neural network architecture. The network also locates seeds and first-order and second-order root tips to drive a search algorithm seeking optimal paths throughout the image, extracting accurate architectures without user interaction. We develop and train a novel deep network architecture to explicitly combine local pixel information with global scene information in order to accurately segment small root features across high-resolution images. The proposed method was evaluated on images of wheat (Triticum aestivum L.) from a seedling assay. Compared with semi-automatic analysis via the original RootNav tool, the proposed method demonstrated comparable accuracy, with a 10-fold increase in speed. The network was able to adapt to different plant species via transfer learning, offering similar accuracy when transferred to an Arabidopsis thaliana plate assay. A final instance of transfer learning, to images of Brassica napus from a hydroponic assay, still demonstrated good accuracy despite many fewer training images.
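The idea of using detected tips and seeds to drive a search for optimal paths can be illustrated with a shortest-path search over a per-pixel cost map. The abstract does not name the algorithm used, so the sketch below substitutes Dijkstra's algorithm on a 4-connected grid as a stand-in. The function `trace_root`, the cost values, and the tip and seed coordinates are all hypothetical.

```python
import heapq

def trace_root(cost, tip, seed):
    """Minimum-cost 4-connected path from a root tip to a seed.

    cost[r][c] is assumed to be low where the network believes a root
    is present; Dijkstra's algorithm is an illustrative choice only.
    """
    rows, cols = len(cost), len(cost[0])
    best = {tip: cost[tip[0]][tip[1]]}   # cheapest known cost to each pixel
    parent = {}                          # back-pointers for path recovery
    heap = [(best[tip], tip)]
    while heap:
        dist, node = heapq.heappop(heap)
        if node == seed:
            break
        if dist > best[node]:
            continue                     # stale queue entry
        r, c = node
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols:
                new_dist = dist + cost[nr][nc]
                if new_dist < best.get((nr, nc), float("inf")):
                    best[(nr, nc)] = new_dist
                    parent[(nr, nc)] = node
                    heapq.heappush(heap, (new_dist, (nr, nc)))
    if seed not in best:
        return None
    # Walk the back-pointers from the seed to the tip, then reverse.
    path = [seed]
    while path[-1] != tip:
        path.append(parent[path[-1]])
    path.reverse()
    return path

# Toy cost map: the column of 1s stands in for a detected root.
cost = [
    [9, 1, 9],
    [9, 1, 9],
    [9, 1, 1],
]
path = trace_root(cost, tip=(2, 2), seed=(0, 1))
print(path)
```

In this toy map the recovered path follows the low-cost pixels from the tip up to the seed, which is the behaviour a root-tracing search would rely on.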

Full Paper Link:

https://academic.oup.com/gigascience/article/8/11/giz123/5614712

GitHub Link:

https://github.com/robail-yasrab/RootNav-2.0

10/2017

Plant phenotyping has continued to pose a challenge to computer vision for many years. There is a particular demand to accurately quantify images of crops, and the natural variability and structure of these plants present unique difficulties. Recently, machine learning approaches have shown impressive results in many areas of computer vision, but these rely on large datasets that are at present not available for crops. We present a new dataset, called ACID, that provides hundreds of accurately annotated images of wheat spikes and spikelets, along with image-level class annotation. We then present a deep learning approach capable of accurately localising wheat spikes and spikelets, despite the varied nature of this dataset. As well as locating features, our network offers near-perfect counting accuracy for spikes (95.91%) and spikelets (99.66%). We also extend the network to perform simultaneous classification of images, demonstrating the power of multi-task deep architectures for plant phenotyping. We hope that our dataset will be useful to researchers in continued improvement of plant and crop phenotyping. With this in mind, alongside the dataset we will make all code and trained models available online.